Welcome to the world of synthetic data creation! In today’s data-driven era, businesses are constantly seeking innovative solutions to address the challenges of data privacy, security, and scarcity. That’s where synthetic data comes into play. Synthetic data refers to artificially generated data that mimics the characteristics and patterns of real data, while ensuring the protection of sensitive information.

By creating synthetic data, businesses can unlock a myriad of possibilities, from improving machine learning algorithms and training models to conducting robust testing and analysis. This article will guide you through the process of creating synthetic data, discussing its benefits, techniques, and best practices. Whether you’re a data scientist, researcher, or developer, get ready to dive into the exciting world of synthetic data creation and harness its immense potential.

Inside This Article

- Overview of Synthetic Data

- Importance of Synthetic Data in Data Science

- Methods for Generating Synthetic Data

- Evaluating the Quality of Synthetic Data

- Conclusion

- FAQs

Overview of Synthetic Data

Data plays a crucial role in the world of data science. It helps businesses make informed decisions, develop innovative products, and predict market trends. However, acquiring real-world data can be challenging due to privacy concerns, data availability, or cost constraints. That’s where synthetic data comes into play.

Synthetic data is artificially generated data that mimics the characteristics of real-world data. It is created using algorithms and statistical models, allowing businesses and researchers to work with realistic datasets without compromising sensitive information. With synthetic data, organizations can perform various analyses, build models, and develop algorithms without accessing or exposing real data.

The creation of synthetic data involves replicating the statistical properties, patterns, and dependencies found in real data. This ensures that the synthetic data closely resembles the original dataset, capturing its essential features and distributions. By generating synthetic data, data scientists and analysts can overcome the limitations associated with real data and explore different scenarios and possibilities.
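To make the idea of replicating statistical properties concrete, here is a minimal sketch that fits a two-column dataset and samples new rows from the fit. It assumes the two columns are roughly jointly normal, which is a simplification chosen for illustration. The names `pearson` and `fit_and_sample`, and the age and income figures, are made up for this example.

```python
import math
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def fit_and_sample(xs, ys, n_samples, seed=0):
    """Fit a bivariate normal to (xs, ys) and draw synthetic pairs from it."""
    rng = random.Random(seed)
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs) / (n - 1))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys) / (n - 1))
    rho = pearson(xs, ys)
    synthetic = []
    for _ in range(n_samples):
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        x = mx + sx * z1
        # Mixing z1 into y reproduces the fitted correlation rho.
        y = my + sy * (rho * z1 + math.sqrt(1 - rho ** 2) * z2)
        synthetic.append((x, y))
    return synthetic

# Made-up "real" data: ages and incomes that rise together.
rng = random.Random(42)
real_age = [rng.uniform(20, 65) for _ in range(500)]
real_income = [20000 + 900 * a + rng.gauss(0, 8000) for a in real_age]

synthetic = fit_and_sample(real_age, real_income, n_samples=2000)
syn_age = [a for a, _ in synthetic]
syn_income = [i for _, i in synthetic]

# The two correlations should be close to each other.
print(round(pearson(real_age, real_income), 2),
      round(pearson(syn_age, syn_income), 2))
```

The synthetic rows keep the means, spreads, and correlation of the original columns, but not their exact shape: the made-up ages are uniform, while the sampled ages are bell-shaped. Simple generators can miss details of the real data in exactly this way.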

Synthetic data can be used in a wide range of applications. In healthcare, it can be utilized to develop predictive models for diagnosing diseases and assessing patient outcomes. In finance, synthetic data can aid in detecting fraudulent transactions and analyzing market behavior. It can also be employed in training machine learning algorithms, as it provides a large and diverse dataset for model optimization.

One of the significant advantages of using synthetic data is the preservation of privacy and data security. Because it mimics real data without reproducing individual records, well-generated synthetic data can be shared and used with far fewer privacy concerns than the original, provided it has been checked for leakage of personal or sensitive information. This makes it particularly valuable for collaboration between organizations, research studies, and data sharing initiatives.

Despite its benefits, synthetic data is not a perfect substitute for real data. While it captures the statistical properties, it might not reflect certain nuances, biases, or complexities present in the real world. It is crucial to carefully evaluate the quality and validity of the synthetic data before using it for analysis or modeling.

Importance of Synthetic Data in Data Science

Data science has become a vital component in various industries, helping businesses make informed decisions, develop innovative products, and gain a competitive edge. However, one of the major challenges faced by data scientists is the availability of quality data for analysis. This is where synthetic data comes into play. Synthetic data holds immense importance in the field of data science as it offers several benefits that help overcome limitations associated with real-world data.

One of the primary advantages of synthetic data is the ability to address privacy concerns. In many cases, data used for analysis contains sensitive information, such as personally identifiable information (PII). It can be challenging to securely handle and share this data with multiple stakeholders. Synthetic data allows data scientists to generate artificial datasets that retain the statistical properties of the original data, but without any real PII, ensuring privacy and compliance.

Moreover, synthetic data also helps to overcome issues related to data scarcity. In certain industries or domains, collecting a large amount of data can be time-consuming and costly. Synthetic data can be generated in abundance, replicating the characteristics and patterns of the original data. This not only saves time but also provides data scientists with a diverse set of data to work with, enabling them to train more robust machine learning models.

An interesting aspect of synthetic data is its ability to simulate different scenarios and edge cases. Real-world data might not always represent all possible scenarios or outliers due to limitations in data collection. Synthetic data allows data scientists to create artificial instances that cover a wide range of situations, enabling them to perform robust testing and analysis on their models.

Synthetic data also plays a pivotal role in data augmentation. Augmenting the existing dataset with synthetic samples can improve the performance and generalization capability of machine learning models. By introducing synthetic data that encapsulates different variations and patterns, the model becomes more resilient to unseen data during deployment.
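As a rough illustration of augmentation on numeric data, the sketch below adds jittered copies of each row to a dataset. The function name `augment_with_noise`, the 5% noise scale, and the sensor readings are all assumptions made for this example. A suitable noise level depends on the data and should be chosen with domain knowledge.

```python
import random

def augment_with_noise(rows, copies=3, noise_scale=0.05, seed=0):
    """Return the original rows plus `copies` jittered variants of each row.

    Each numeric value is perturbed by Gaussian noise whose standard
    deviation is `noise_scale` times the magnitude of that value.
    """
    rng = random.Random(seed)
    augmented = [list(row) for row in rows]
    for row in rows:
        for _ in range(copies):
            augmented.append(
                [v + rng.gauss(0, noise_scale * abs(v)) for v in row]
            )
    return augmented

# Hypothetical sensor readings: (temperature, pressure).
readings = [[21.5, 101.3], [22.1, 100.9], [19.8, 102.0]]
augmented = augment_with_noise(readings)
print(len(readings), "rows before,", len(augmented), "rows after")
```

The original rows are kept at the front of the result, so the augmented set adds to the real observations without replacing them.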

Furthermore, synthetic data can be shared and exchanged more freely than the original data, because properly generated records do not correspond to real individuals. This facilitates collaboration between different organizations and researchers, leading to improved research outcomes and advancements in the field of data science.

Methods for Generating Synthetic Data

Generating synthetic data is an essential process in data science, especially when working with sensitive or limited data. There are several methods available, each with its own advantages and limitations. Let’s explore some of the commonly used ones:

1. Rule-based synthesis: This method involves creating rules or constraints to generate synthetic data that adheres to the same patterns and relationships as the original data. It can be used when the underlying data distribution is well-known and can be accurately represented using defined rules.

2. Data augmentation: Data augmentation involves applying a set of transformations to the existing data to create new synthetic samples. Common techniques include rotation, translation, and scaling for images, synonym replacement for text, and adding noise to numeric values. This method is particularly useful when working with image or text data.

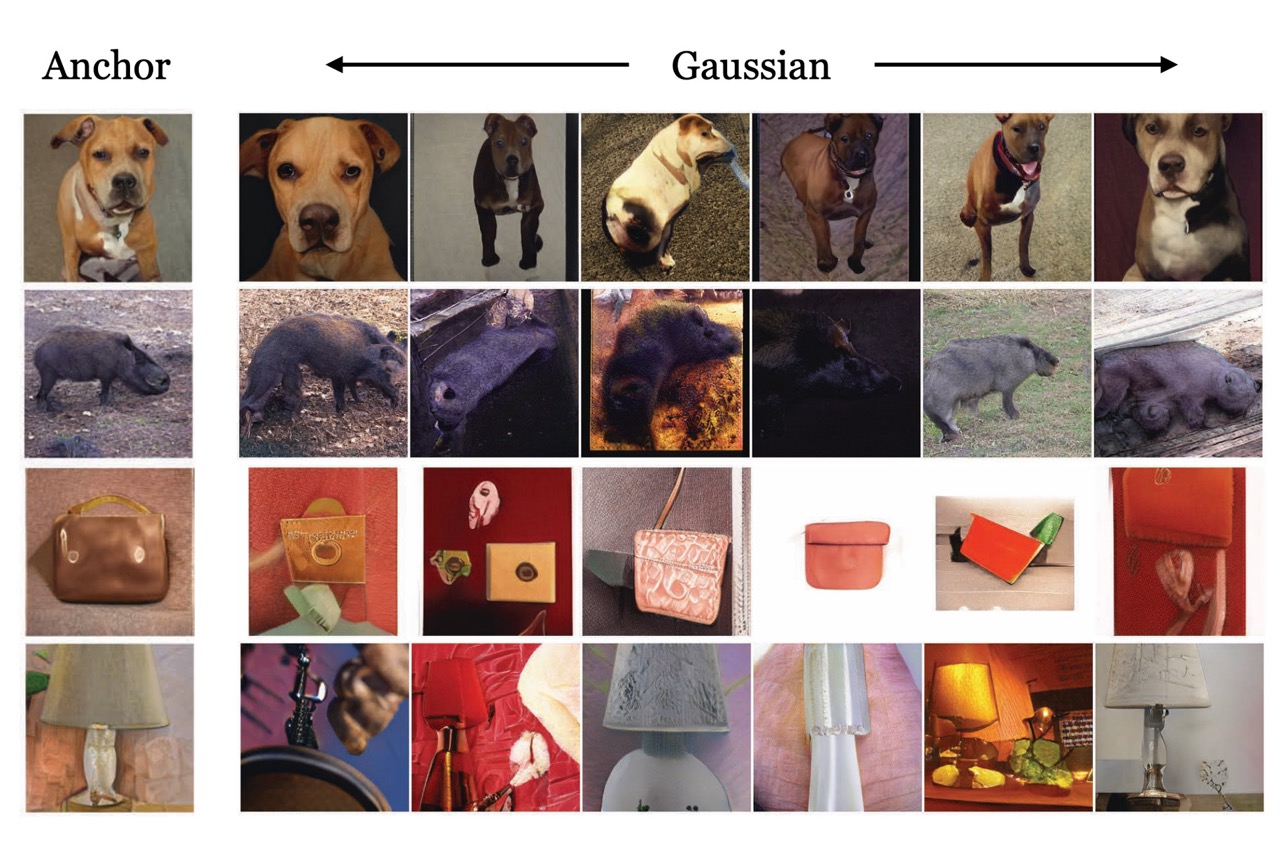

3. Generative Adversarial Networks (GANs): GANs are a popular deep learning technique used for generating synthetic data. GANs consist of two neural networks – a generator and a discriminator. The generator network learns to generate synthetic data that is similar to the real data, while the discriminator network learns to distinguish between the real and synthetic data. GANs have been successful in generating realistic images, text, and even tabular data.

4. Monte Carlo simulations: Monte Carlo simulation is a statistical method that uses random sampling techniques to generate synthetic data based on given probability distributions. This method is particularly useful when dealing with complex systems where it may be challenging to model the underlying processes explicitly.

5. PrivBayes: PrivBayes is a differentially private data publishing algorithm that generates synthetic data while protecting sensitive information. It uses a Bayesian network to model the relationships between attributes, adds noise to the low-dimensional distributions derived from that network to protect individual privacy, and then samples synthetic data that preserves the overall data distribution.

6. Variational Autoencoders (VAEs): VAEs are another type of generative model that can be used to generate synthetic data. VAEs are neural network architectures that learn a compressed representation of the input data and can generate new samples by sampling from the learned latent space. VAEs have been successful in generating diverse and high-quality synthetic data across various domains.
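Of the methods listed above, rule-based synthesis is the simplest to show in a few lines. The sketch below generates customer records from hand-written rules. The schema, the `PLANS` table, the weights, and the function name `make_customer` are all hypothetical. In practice the rules would come from domain knowledge about the original data.

```python
import random

# Hypothetical plan table: plan name -> monthly fee.
PLANS = {"basic": 10.0, "plus": 25.0, "pro": 60.0}

def make_customer(rng):
    """Generate one synthetic customer that satisfies the rules below."""
    # Rule: customers are adults.
    age = rng.randint(18, 90)
    # Rule: an account cannot be older than the customer's adult life.
    tenure_years = rng.randint(0, age - 18)
    # Rule: cheaper plans are more common (weights are assumed).
    plan = rng.choices(list(PLANS), weights=[6, 3, 1])[0]
    # Rule: the fee is fully determined by the plan.
    monthly_fee = PLANS[plan]
    return {
        "age": age,
        "tenure_years": tenure_years,
        "plan": plan,
        "monthly_fee": monthly_fee,
    }

rng = random.Random(7)
customers = [make_customer(rng) for _ in range(1000)]
print(customers[0])
```

Every record is valid by construction, which makes this approach handy for test data. The trade-off is that the output is only as realistic as the rules written into it.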

The choice of method for generating synthetic data depends on the nature of the data, the desired level of realism, and the specific requirements of the problem at hand. Whichever method is used, evaluating the quality of the synthetic data is crucial to ensure its reliability and applicability in downstream analysis. The next section looks at how to do that.

Evaluating the Quality of Synthetic Data

When it comes to using synthetic data for various data science applications, one crucial aspect that needs to be considered is the quality of the generated data. Evaluating the quality of synthetic data is essential to ensure its reliability and usability in real-world scenarios.

Here are some key factors to consider for evaluating the quality of synthetic data:

- Data Distribution: The synthetic data should accurately represent the underlying data distribution. It should mimic the statistical properties, such as means, variances, and correlations, of the original data.

- Feature Importance: The synthetic data should preserve the importance of different features present in the original data. This means that any significant patterns or relationships between features should be retained in the generated data.

- Data Diversity: The synthetic data should exhibit diversity similar to that of the original data. It should capture the range of values and variations observed in the real-world data to ensure its applicability across different scenarios.

- Data Consistency: The synthetic data should be consistent with the constraints and relationships present in the original data. For example, if there are certain logical constraints between different features, the generated data should adhere to those constraints.

- Data Privacy and Security: If the original data contains sensitive or private information, it is crucial to ensure that the generated synthetic data does not reveal any personally identifiable information (PII) or compromise the security of individuals.

- Data Utility: The synthetic data should be useful for the intended purpose. It should effectively capture the key characteristics and patterns of the original data that are relevant for the specific data science task.
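The data distribution criterion is one of the easier ones to check in code. The sketch below compares a real and a synthetic column using the difference in means, the ratio of standard deviations, and a two-sample Kolmogorov-Smirnov statistic. The helper names `ks_statistic` and `compare_column` and the sample data are assumptions for illustration, and acceptable thresholds depend on the use case.

```python
import bisect
import random
import statistics

def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between
    the empirical CDFs of a and b (0 = identical, 1 = fully separated)."""
    a_sorted, b_sorted = sorted(a), sorted(b)
    gap = 0.0
    for v in a_sorted + b_sorted:
        cdf_a = bisect.bisect_right(a_sorted, v) / len(a_sorted)
        cdf_b = bisect.bisect_right(b_sorted, v) / len(b_sorted)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap

def compare_column(real, synthetic):
    """Summarize how closely one synthetic column tracks the real one."""
    return {
        "mean_diff": abs(statistics.mean(real) - statistics.mean(synthetic)),
        "stdev_ratio": statistics.stdev(synthetic) / statistics.stdev(real),
        "ks": ks_statistic(real, synthetic),
    }

rng = random.Random(1)
real = [rng.gauss(50, 10) for _ in range(1000)]
good = [rng.gauss(50, 10) for _ in range(1000)]   # same distribution
bad = [rng.uniform(20, 80) for _ in range(1000)]  # similar mean, wrong shape

good_report = compare_column(real, good)
bad_report = compare_column(real, bad)
print("good:", good_report)
print("bad: ", bad_report)
```

The `bad` column has nearly the same mean as the real one, so a mean-only check would pass it. The standard deviation ratio and the KS statistic expose the mismatch, which is why several measures are usually reported together.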

Evaluating the quality of synthetic data is not a straightforward task. It often requires a combination of statistical analysis, visual inspection, and validation against real-world benchmarks. It is important to establish robust evaluation metrics and benchmarks to assess the performance and effectiveness of different synthetic data generation techniques.
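The privacy criterion can also be partly automated. The sketch below, with the hypothetical helper `exact_match_rate` and invented records, measures how many synthetic rows are verbatim copies of real rows. It is only a first screen: passing it does not show that the data is private, since near-duplicates and rare attribute combinations can still identify individuals.

```python
def exact_match_rate(real_rows, synthetic_rows):
    """Fraction of synthetic rows that are identical to some real row."""
    real_set = {tuple(row) for row in real_rows}
    matches = sum(1 for row in synthetic_rows if tuple(row) in real_set)
    return matches / len(synthetic_rows)

# Invented records: (name, age, state).
real_rows = [("alice", 34, "NY"), ("bob", 51, "TX"), ("carol", 29, "CA")]
synthetic_rows = [
    ("dana", 33, "NY"),
    ("bob", 51, "TX"),   # copied verbatim from the real data
    ("eli", 30, "CA"),
    ("fay", 47, "WA"),
]

rate = exact_match_rate(real_rows, synthetic_rows)
print(f"{rate:.0%} of synthetic rows duplicate a real row")
```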

By carefully evaluating the quality of synthetic data, data scientists can ensure that the generated data is reliable and fit for purpose. It enables the use of synthetic data in various applications such as data analysis, model training, and algorithm development, facilitating the advancement of data science in a range of industries and domains.

Conclusion

Creating synthetic data is a powerful technique that offers numerous benefits in various fields. Whether you are in the medical field, finance industry, or performing research, synthetic data can help you overcome challenges related to data privacy, limited data availability, and costly data collection.

By generating artificial datasets that mimic real-world data, you can develop and refine algorithms, perform accurate simulations, and identify trends and patterns without the risk of exposing sensitive information.

Additionally, synthetic data allows for easier sharing and collaboration between researchers, enabling the development of more robust models and fostering innovation. It offers a cost-effective and efficient solution in scenarios where acquiring real data is impractical or time-consuming.

Overall, the use of synthetic data opens up a world of possibilities. Embracing this technique can revolutionize the way we approach data-driven projects, ensuring the privacy and security of individuals while enabling progress and discovery.

FAQs

1. What is synthetic data?

Synthetic data refers to artificially generated data that mimics the characteristics of real-world data. It is created using algorithms and statistical models to replicate the patterns, distributions, and structures found in actual data. Synthetic data is often used in situations where real data is limited, sensitive, or unavailable.

2. Why would someone create synthetic data?

There are several reasons why someone would create synthetic data. It can be used for testing and development purposes, allowing organizations to simulate real-world scenarios without exposing sensitive or private information. Synthetic data can also be utilized for research, machine learning training, and data analysis, offering a privacy-preserving alternative to using real data.

3. How is synthetic data generated?

Synthetic data is generated by applying mathematical algorithms and statistical models to existing datasets. These algorithms analyze the underlying patterns, correlations, and distributions of the original data and generate new data points that closely resemble the original dataset. Various techniques like Monte Carlo simulations, generative adversarial networks (GANs), and differential privacy methods are commonly employed for creating synthetic data.
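As one small example of the Monte Carlo approach, the sketch below simulates a day of customer purchases by sampling from assumed probability distributions. The arrival rate, opening hours, mean spend, and the function name `simulate_day` are all made up for illustration. In a real project those parameters would be estimated from existing data.

```python
import random

def simulate_day(rng, arrivals_per_hour=12.0, hours=8.0, mean_spend=30.0):
    """Simulate one day of purchases as (arrival_time, amount) pairs.

    Gaps between arrivals are exponential (a Poisson arrival process) and
    spend per customer is exponential with the given mean. Both choices
    are assumptions made for this sketch.
    """
    t, purchases = 0.0, []
    while True:
        t += rng.expovariate(arrivals_per_hour)  # hours until next arrival
        if t > hours:
            break
        amount = rng.expovariate(1 / mean_spend)
        purchases.append((round(t, 3), round(amount, 2)))
    return purchases

rng = random.Random(2024)
days = [simulate_day(rng) for _ in range(500)]
avg_customers = sum(len(d) for d in days) / len(days)
print(f"average customers per simulated day: {avg_customers:.1f}")
```

With 12 arrivals per hour over 8 hours, the average should land near 96 customers per day, which is a quick sanity check that the simulation behaves as intended.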

4. Is synthetic data as good as real data?

While synthetic data can mimic the statistical properties of real data, it is important to note that it is not a perfect substitute for real data. Synthetic data aims to capture the essential characteristics of the original data, but it may not fully replicate complex relationships or capture the full spectrum of real-world variability. Therefore, it is crucial to carefully validate and assess the quality and representativeness of synthetic data before using it in any analysis or application.

5. Is synthetic data legal and ethical to use?

The use of synthetic data raises legal and ethical considerations. In many cases, synthetic data is used as a privacy-preserving alternative to actual data, especially when dealing with sensitive or personally identifiable information. However, the legality of using synthetic data varies depending on the jurisdiction and the specific use case. Organizations must ensure compliance with relevant data protection and privacy regulations when generating and using synthetic data to avoid any legal or ethical issues.