Apple has recently unveiled its latest set of accessibility features, aimed at making the user experience more inclusive and convenient for individuals with disabilities. These new features, including Live Captions, Door Detection, and much more, demonstrate Apple’s ongoing commitment to accessibility and innovation.

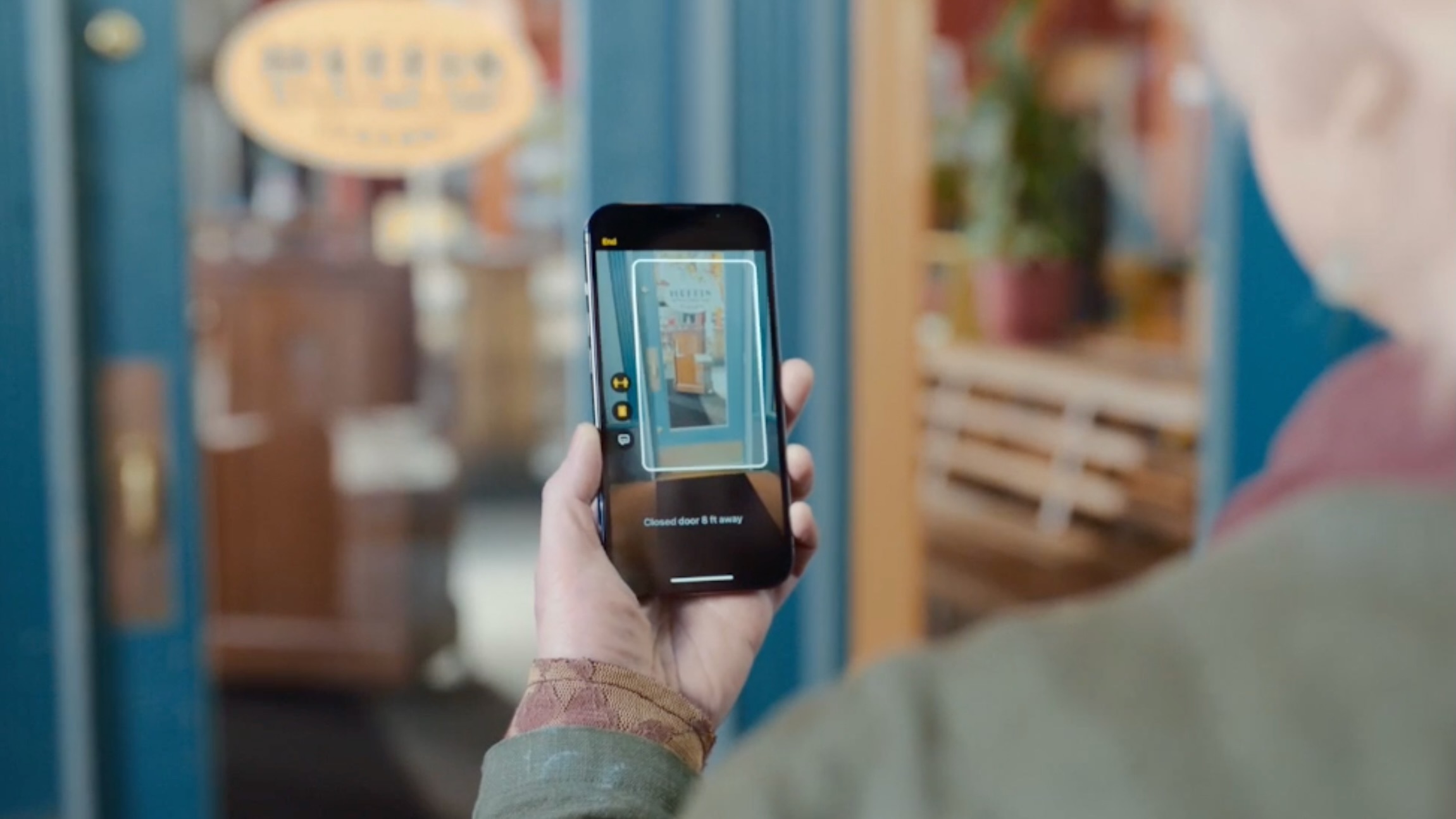

With Live Captions, users can now enjoy real-time captions for any audio or video content, making it easier for individuals with hearing impairments to follow along. The Door Detection feature utilizes the iPhone’s camera to detect and highlight doors in the physical environment, assisting users with visual impairments in navigating their surroundings.

These new accessibility features provide a glimpse into the future of technology, where inclusivity and usability go hand in hand. In this article, we will delve into the details of these exciting features, exploring their benefits and how they enhance the overall experience for those who rely on assistive technologies.

Inside This Article

- Live Captions

- Door Detection

- Eye Tracking Enhancements

- AssistiveTouch for Apple Watch

- Conclusion

- FAQs

Live Captions

Apple’s latest software update brings a host of new accessibility features. One of the standouts is Live Captions, which automatically generates captions for any audio or video content on your iPhone or iPad. Whether you are watching a video, listening to a podcast, or taking a phone call, Live Captions displays real-time captions on your screen, so you can follow along even if you have difficulty hearing or want to watch without sound.

Live Captions uses advanced speech recognition technology to transcribe spoken words into text. The captions are displayed in a non-intrusive manner, allowing you to continue enjoying the content without any distractions. This feature not only benefits individuals with hearing impairments, but it can also be useful in noisy environments or when you want to watch videos discreetly in public places.

To enable Live Captions on your device, open Settings, go to Accessibility, and select “Live Captions.” From there, you can toggle the feature on or off. Captions are generated on the device itself, so Live Captions works offline and does not require an internet connection.

The Live Captions feature is a game-changer in terms of accessibility. It opens up a world of content for individuals who may have previously struggled to access audio or video material. Whether it’s watching a movie, attending a virtual meeting, or enjoying a podcast, Live Captions empowers everyone to fully participate and engage with the content.

Door Detection

One of the new accessibility features announced by Apple is Door Detection. This innovative feature is designed to assist individuals with visual impairments, providing them with real-time information about the presence of doors in their surroundings.

The Door Detection feature utilizes the powerful camera capabilities of iOS devices to detect and identify doors accurately. By leveraging advanced computer vision algorithms, the device can recognize the shape and structure of a door, alerting the user through audio cues or vibrations.

This functionality proves particularly beneficial for individuals with low vision or blindness, as it allows them to navigate unfamiliar environments more confidently. Whether they are in a public building, office space, or someone’s home, Door Detection can help them identify and locate entrances and exits with ease.

Apple’s commitment to accessibility is evident with the inclusion of features like Door Detection. By leveraging technological advancements, the company aims to empower individuals with disabilities and ensure equal accessibility for all.

Furthermore, Door Detection is customizable to suit the user’s preferences. Users can choose between different types of alerts, such as sound or haptic feedback, based on their individual needs and sensory preferences.

To use Door Detection, users open the Magnifier app on their device and turn on Detection Mode. The feature requires an iPhone or iPad with a LiDAR Scanner, which works together with the built-in camera to detect doors and notify the user about their presence.

This new accessibility feature from Apple demonstrates the company’s ongoing commitment to enhancing the lives of individuals with disabilities. By introducing Door Detection, Apple continues to bridge the accessibility gap, making technology more inclusive and empowering for users of all abilities.

Eye Tracking Enhancements

Apple’s latest accessibility feature update includes exciting enhancements for eye tracking technology. The advancements in this area aim to provide a more inclusive and seamless experience for users with mobility impairments. By leveraging the power of advanced machine learning and gaze detection algorithms, Apple has made significant strides in improving eye tracking capabilities on its devices.

Eye tracking allows users to control their devices simply by moving their eyes. This technology tracks the movement of the user’s gaze on the screen, enabling them to navigate menus, select options, and interact with applications without the need for physical input. It is a game-changer for individuals with limited mobility, as it empowers them to use their devices with greater independence.
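To make the idea of selecting options by gaze more concrete, here is a minimal Python sketch of dwell selection, a common approach in gaze interfaces where an item is chosen once the user looks at it for long enough. The article does not say which method Apple uses, so the `Target` class, the `dwell_select` function, and the one-second threshold are all illustrative assumptions, not Apple’s implementation.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Sequence, Tuple


@dataclass(frozen=True)
class Target:
    """A hypothetical on-screen item, described by its bounding box."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)


def hit_test(targets: Sequence[Target], px: float, py: float) -> Optional[Target]:
    """Return the first target under the gaze point, or None."""
    for target in targets:
        if target.contains(px, py):
            return target
    return None


def dwell_select(
    samples: Iterable[Tuple[float, float, float]],
    targets: Sequence[Target],
    dwell_seconds: float = 1.0,  # assumed threshold, not an Apple value
) -> List[str]:
    """Select a target once the gaze has rested on it for dwell_seconds.

    Each sample is (timestamp_in_seconds, gaze_x, gaze_y).
    """
    selected: List[str] = []
    current: Optional[Target] = None
    dwell_start = 0.0
    fired = False
    for timestamp, px, py in samples:
        target = hit_test(targets, px, py)
        if target != current:
            # Gaze moved to a different item (or off all items): restart the timer.
            current = target
            dwell_start = timestamp
            fired = False
        elif current is not None and not fired and timestamp - dwell_start >= dwell_seconds:
            # Gaze stayed long enough: select once per continuous dwell.
            selected.append(current.name)
            fired = True
    return selected


targets = [Target("Play", 0, 0, 100, 100), Target("Stop", 200, 0, 100, 100)]
samples = [
    (0.0, 10, 10), (0.5, 20, 20), (1.0, 30, 30), (1.5, 30, 30),  # rests on Play
    (1.6, 250, 50), (2.0, 250, 50),                               # brief glance at Stop
    (2.2, 500, 500),                                              # looks away
    (2.3, 250, 50), (3.4, 250, 50),                               # rests on Stop
]
print(dwell_select(samples, targets))
```

In this sketch, a brief glance at an item does nothing. Only a sustained look triggers a selection, which helps avoid accidental activations.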

With these enhancements, Apple has expanded the functionality of eye tracking to make it even more accurate and responsive. The improved algorithms can now detect subtle eye movements with greater precision, resulting in a more reliable tracking experience. This means that users can enjoy a smoother and more natural interaction with their devices, saving them time and effort.

Another remarkable improvement is the reduced latency in eye tracking response. The latest enhancements dramatically decrease the time between the user’s eye movement and the corresponding action on the screen. This reduction in latency has a significant impact on usability, ensuring a near-instantaneous and seamless interaction between the user and their device.

The updated eye tracking technology also includes advanced calibration techniques. Calibrating eye tracking is a critical step to ensure accurate tracking of the user’s gaze. Apple’s calibration process has been refined to be quicker, easier, and more intuitive. Users can now calibrate their devices in a matter of minutes, making the setup process hassle-free and accessible to everyone.

Furthermore, Apple has expanded the range of supported languages for eye tracking. This means that more users around the world can take advantage of this innovative technology, regardless of their native language. It promotes inclusivity on a global scale, allowing individuals with various backgrounds and language preferences to benefit from the increased accessibility offered by eye tracking.

These eye tracking enhancements further solidify Apple’s commitment to accessibility, ensuring that its devices are accessible to a wide range of users. By continuously improving and refining its features, Apple has made significant strides in creating an inclusive digital environment for individuals with different abilities. The eye tracking enhancements, along with other accessibility features, reaffirm Apple’s position as a leader in accessibility technology.

AssistiveTouch for Apple Watch

Apple has recently announced a game-changing accessibility feature for the Apple Watch called AssistiveTouch. This innovative technology is designed to empower individuals with disabilities, making it easier for them to interact with their Apple Watch and enjoy its functionalities.

AssistiveTouch for Apple Watch utilizes advanced sensors and algorithms to detect subtle muscle movements and gestures, allowing users to perform various tasks without having to touch the screen. This hands-free experience opens up a world of possibilities for individuals with physical impairments or limited mobility.

With AssistiveTouch enabled, users can navigate the Apple Watch interface using just hand gestures. By simply clenching their fist or moving their hand in specific ways, they can access different features and functionalities. This includes interacting with notifications, activating the control center, launching apps, controlling volume, and even answering or declining incoming calls.

This groundbreaking technology also incorporates the watch’s gyroscope and accelerometer to detect subtle movements of the user’s wrist. By rotating their wrist in a clockwise or counterclockwise motion, users can scroll through menus, adjust settings, and navigate through their Apple Watch effortlessly.
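As a rough illustration of how wrist rotation could be turned into scrolling, the Python sketch below adds up gyroscope readings and emits one scroll step each time the wrist has turned far enough. The article does not describe how watchOS processes sensor data, so the `scroll_steps` function, the 15-degree step size, and the dead zone that ignores small tremors are all hypothetical choices made for this example.

```python
from typing import Iterable, List


def scroll_steps(
    rotation_rates: Iterable[float],
    dt: float,
    step_degrees: float = 15.0,  # assumed rotation needed per scroll step
    dead_zone: float = 5.0,      # assumed rate (deg/s) below which motion is ignored
) -> List[int]:
    """Convert gyroscope rotation rates (deg/s) into scroll steps.

    Positive rates stand for clockwise rotation and give +1 steps;
    negative rates stand for counterclockwise rotation and give -1 steps.
    """
    steps: List[int] = []
    accumulated = 0.0
    for rate in rotation_rates:
        if abs(rate) < dead_zone:
            continue  # treat very slow rotation as unintentional movement
        accumulated += rate * dt  # integrate rate over time to get an angle
        while accumulated >= step_degrees:
            steps.append(1)
            accumulated -= step_degrees
        while accumulated <= -step_degrees:
            steps.append(-1)
            accumulated += step_degrees
    return steps


# Samples taken every 0.5 s: slow drift, clockwise turn, drift, counterclockwise turn.
readings = [2.0, 20.0, 20.0, 20.0, -3.0, -40.0, -40.0]
print(scroll_steps(readings, dt=0.5))
```

The dead zone is the key design choice here: without it, small involuntary movements would slowly add up and cause unwanted scrolling.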

Moreover, AssistiveTouch for Apple Watch can be combined with Siri voice commands through the watch’s built-in microphone. Users can simply speak commands such as “Send a message to mom” or “Set a timer for 10 minutes” to perform actions without any physical interaction.

AssistiveTouch for Apple Watch is a significant step towards inclusivity and accessibility, reaffirming Apple’s commitment to making its products accessible to everyone. It offers a new level of independence for individuals with disabilities, allowing them to fully enjoy the features of the Apple Watch.

Through AssistiveTouch, Apple has once again demonstrated its dedication to creating technology that empowers individuals with disabilities. With this accessibility feature, the Apple Watch becomes more inclusive and user-friendly, allowing individuals of all abilities to experience the convenience and functionality of the device.

Conclusion

In conclusion, Apple’s announcement of new accessibility features, such as Live Captions and Door Detection, is a significant step towards making technology more inclusive for everyone. These features have the potential to empower individuals with hearing or visual impairments and provide them with a better user experience. Live Captions, in particular, could change the way we consume digital content by providing real-time captions for videos, podcasts, and phone calls. Door Detection helps visually impaired individuals navigate spaces more confidently. Apple’s consistent commitment to improving accessibility shows its dedication to building a more inclusive future for all users. We can expect these new features to raise the bar for accessibility standards across the industry and inspire other tech companies to prioritize inclusivity in their products. With each innovation, we move closer to a world where technology is truly accessible to everyone, regardless of their abilities.

FAQs

1. What are Live Captions?

Live Captions is a new accessibility feature introduced by Apple that automatically generates real-time captions for any audio or video content on your iPhone or iPad. It enables individuals with hearing impairments to have a seamless and inclusive experience when consuming media.

2. How does Live Captions work?

Live Captions leverages advanced machine learning algorithms to analyze the audio of any media being played on your iPhone. It then generates accurate captions that appear on the screen in real-time. Live Captions can be used with various forms of media, including videos, podcasts, voice messages, and even phone calls.

3. What is Door Detection?

Door Detection is a new feature designed to assist individuals with visual impairments. It uses the iPhone’s camera and sensors to identify doors in the physical environment and provide audio or haptic feedback when approaching or standing near a door. This helps users navigate spaces more independently, making everyday tasks easier and more convenient.

4. Can Door Detection be customized?

Yes, Door Detection can be customized to fit your specific needs. Users can choose between different types of alerts, such as sound or haptic feedback, so that the feedback is tailored to the individual’s preferences and requirements.

5. Are these new accessibility features available on all iPhone models?

These new accessibility features, including Live Captions and Door Detection, are available on select iPhone models running the latest version of iOS. To check if your iPhone is compatible, refer to the Apple website or visit the Accessibility settings on your device.