Python is a versatile programming language that is widely used for data analysis and manipulation. When working with large datasets, it is essential to ensure that your data is clean and free from errors or inconsistencies. Cleaning data involves the process of identifying and correcting errors, removing duplicates, handling missing values, and transforming data into a standard format.

In this article, we will explore various techniques and libraries available in Python to clean your data effectively. From handling missing values to dealing with outliers, we will cover the essential steps to ensure your data is accurate and reliable. Whether you are a novice or an experienced Python user, this guide will provide you with the knowledge and skills needed to clean your data with confidence.

Inside This Article

- Understanding Data Cleaning

- Importing Libraries and Loading Data

- Handling Missing Data

- Removing Duplicates

- Handling Outliers

- Handling Inconsistent Data

- Dealing with Data Formatting Issues

- Handling Categorical Data

- Handling Numerical Data

- Conclusion

- FAQs

Understanding Data Cleaning

Data cleaning is a crucial step in any data analysis or machine learning project. It involves identifying and rectifying errors, inconsistencies, and missing data in a dataset. By cleaning the data, you can ensure the accuracy and reliability of your analysis, leading to more meaningful insights and better decision-making.

One of the key aspects of data cleaning is identifying and handling missing data. Missing data can occur due to various reasons, such as data entry errors, incomplete records, or data not being collected for certain observations. Failing to address missing data can skew your results and affect the validity of your analysis.

In addition to handling missing data, data cleaning also involves removing duplicate records. Duplicates can occur when multiple entries of the same data exist in a dataset. These duplicates can lead to biased results and unnecessary duplication of efforts during analysis.

Another important aspect of data cleaning is dealing with outliers. Outliers are extreme values that deviate significantly from the rest of the data. They can distort statistical measures and affect the performance of machine learning algorithms. Identifying and handling outliers is essential to ensure the accuracy and robustness of your analysis.

Inconsistent data is yet another challenge that data cleaning addresses. Inconsistencies can arise from different data sources, formatting issues, or human errors. Standardizing the format and resolving inconsistencies in the data is crucial to maintain consistency and reliability in your analysis.

Data cleaning also involves handling data formatting issues. This includes correcting data types, converting units of measurement, or reformatting data to meet the requirements of a specific analysis or model. Proper data formatting ensures that the data is in a consistent and usable format.

When working with categorical data, data cleaning often involves handling missing values, inconsistent labels, or irrelevant categories. By properly cleaning and encoding categorical data, you can ensure that it is ready for analysis and modeling.

Similarly, numerical data may require cleaning to handle missing values, outliers, or incorrect numerical formats. Cleaning numerical data involves methods such as imputation, transformation, or normalization to ensure the accuracy and reliability of your analysis.

Importing Libraries and Loading Data

When working with data in Python, it is essential to import the necessary libraries to perform various data manipulation tasks. Libraries such as pandas, numpy, and matplotlib are commonly used for data analysis and visualization.

To begin, you can import pandas with the following line of code:

import pandas as pd

This library provides powerful data structures and data analysis tools, making it easier to handle and manipulate data effectively.

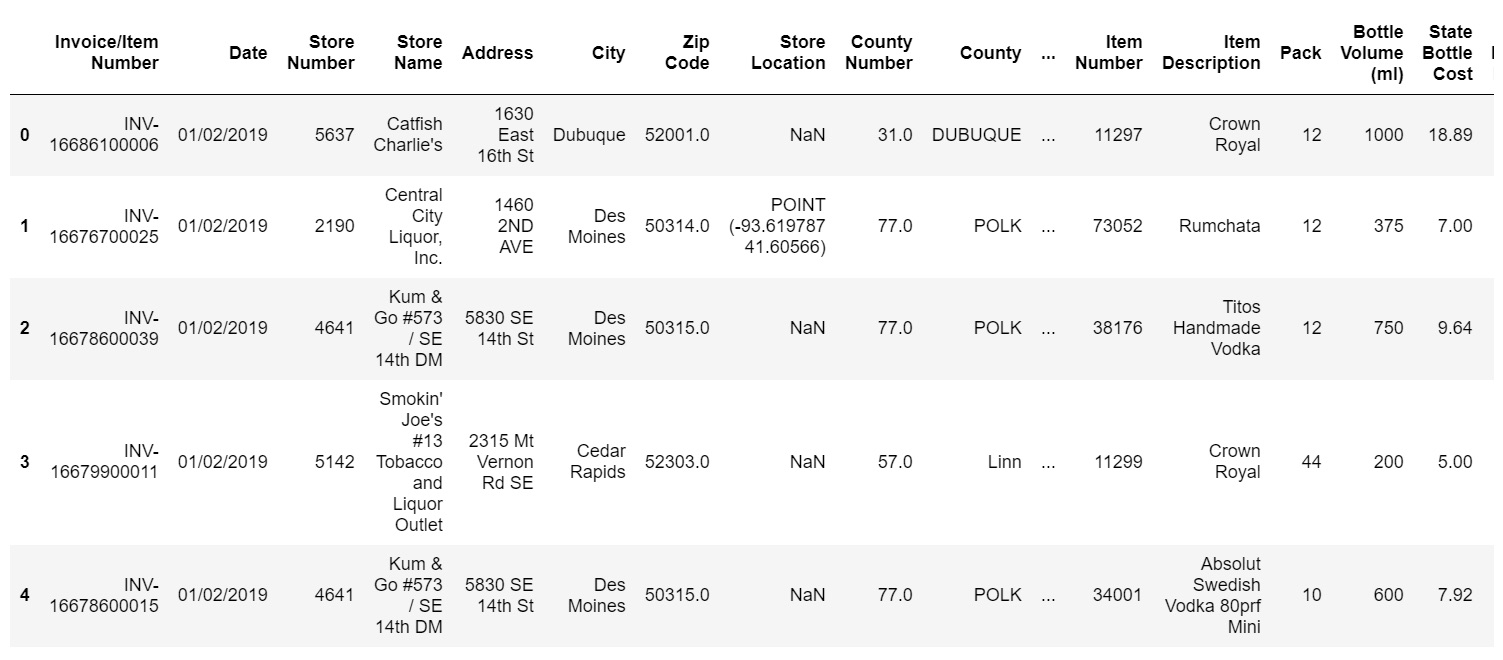

After importing pandas, you can load your data into a pandas DataFrame. A DataFrame is a two-dimensional labeled data structure with columns of potentially different data types. You can load data from various sources like CSV, Excel, databases, and more.

To load data from a CSV file, you can use the `read_csv()` function provided by pandas. Here’s an example:

df = pd.read_csv(‘data.csv’)

Make sure to replace `’data.csv’` with the path to your actual data file or URL.

If you have an Excel file, you can use the `read_excel()` function instead:

df = pd.read_excel(‘data.xlsx’)

Again, replace `’data.xlsx’` with the correct file path or URL.

When loading data, pandas automatically assigns column names based on the headers in the file. However, you can also specify column names explicitly by passing a list of column names using the `names` parameter:

df = pd.read_csv(‘data.csv’, names=[‘col1’, ‘col2’, ‘col3’])

This is useful when your data file doesn’t include headers, or you want to provide custom column names.

Loading data into a DataFrame allows you to perform various data cleaning and analysis tasks easily. You can inspect the data using methods like `head()` to display the first few rows, `info()` to get information about the DataFrame, or use various indexing and slicing techniques for data manipulation.

Now that you have imported the necessary libraries and loaded your data, you are ready to begin cleaning and preparing your data for analysis.

Handling Missing Data

Missing data is a common issue in datasets and can have a significant impact on the accuracy and reliability of our analysis. Fortunately, Python provides various methods to handle missing data effectively. Let’s explore some of the techniques:

1. Dropping Missing Values: One approach to handling missing data is to simply drop the rows or columns that contain missing values. The dropna() function in Python allows us to do this easily. However, this approach should be used with caution, as it may result in a loss of valuable information.

2. Imputation: Another way to handle missing data is through imputation, which involves filling in the missing values with estimated or predicted values. Python provides several methods for imputation, including mean, median, mode, and creating predictive models to impute the missing values.

3. Categorical Data: When dealing with missing values in categorical data, a common approach is to add a new category to represent the missing values. This allows us to retain the information while avoiding bias in the analysis.

4. Time-Series Data: In time-series data, missing values can be handled by interpolation, where the missing values are estimated based on the values before and after the missing point. Python provides functions like interpolate() to perform this operation.

5. Advanced Techniques: For more complex scenarios, such as when the missing data is related to multiple variables or when the missing data pattern needs to be considered, advanced techniques like multiple imputation and using machine learning algorithms can be employed.

By carefully handling missing data in our datasets, we can ensure that our analysis and models are more accurate and reliable. It’s important to choose the appropriate method based on the nature of the missing data and the specific requirements of our analysis.

Removing Duplicates

Duplicate data can cause issues in data analysis and machine learning models. It is crucial to identify and remove duplicates from your dataset to maintain data integrity and accuracy. Python offers several methods to handle duplicate data effectively.

Method 1: Using the drop_duplicates() function:

The drop_duplicates() function is a convenient way to remove duplicates from a DataFrame. By default, it considers all columns when identifying duplicates. Here’s an example:

python

df.drop_duplicates()

This function removes all rows that have identical values across all columns, keeping only the first occurrence. You can also specify a subset of columns to consider for duplicate removal, like this:

python

df.drop_duplicates(subset=[‘column_name’])

Method 2: Using the duplicated() function:

The duplicated() function returns a boolean Series indicating whether each row is a duplicate or not. You can use this information to filter out the duplicate rows from your DataFrame. Here’s an example:

python

df[df.duplicated()]

This code will return a DataFrame containing only the duplicate rows. You can drop these duplicate rows using the drop() function, like this:

python

df.drop(df[df.duplicated()].index)

Method 3: Using the subset parameter:

You can also use the subset parameter in the drop_duplicates() function to specify a subset of columns for duplicate detection. This is useful when you want to consider only specific columns for duplicate removal. Here’s an example:

python

df.drop_duplicates(subset=[‘column1’, ‘column2’])

This code removes duplicates only if the values in both ‘column1’ and ‘column2’ are identical.

Method 4: Using the groupby() function:

The groupby() function groups the data by one or more columns and allows you to perform aggregation or transformations on the grouped data. By applying the groupby() function followed by the drop_duplicates() function, you can remove duplicates based on specific columns. Here’s an example:

python

df.groupby([‘column1’]).apply(lambda x: x.drop_duplicates())

This code groups the data by ‘column1’ and applies the drop_duplicates() function to each group, effectively removing duplicates in each group.

Remember to store the cleaned data in a new DataFrame or overwrite the original DataFrame for further analysis or processing. Cleaning duplicates ensures accurate and reliable data for your analysis, improving the quality of your results.

Handling Outliers

In the field of data analysis, outliers refer to data points that deviate significantly from other observations in a dataset. Outliers can occur due to various reasons such as measurement errors, data entry mistakes, or rare events. It is important to identify and handle outliers properly as they can have a significant impact on the statistical analysis and modeling process.

Here are some techniques for handling outliers in Python:

- Identifying Outliers: The first step in handling outliers is to identify them in the dataset. This can be done by visually inspecting the data using scatter plots, box plots, or histograms. Additionally, statistical methods such as the Z-score or the interquartile range (IQR) can be used to detect outliers.

- Removing Outliers: One approach to handling outliers is to remove them from the dataset. However, caution should be exercised when removing outliers as it can potentially lead to the loss of important information. Removing outliers should only be done if there is a valid reason and it does not significantly affect the overall analysis.

- Transforming Data: Another method to handle outliers is to transform the data. This can be done by applying mathematical functions such as logarithmic or power transformations to normalize the distribution of the data. Transforming the data can help make the outliers less influential in the analysis.

- Imputing Outliers: In some cases, it may be necessary to impute or replace the outliers with more appropriate values. This can be done by using statistical techniques such as mean imputation, median imputation, or regression imputation. Imputing outliers can help maintain the integrity of the dataset while addressing the impact of the outliers.

- Using Robust Statistics: Robust statistical methods are designed to be more resistant to the influence of outliers. Techniques such as the median absolute deviation (MAD) or robust regression can provide more reliable estimates and predictions in the presence of outliers.

It is important to consider the context of the data and the specific analysis being performed when deciding on the appropriate method for handling outliers. Different approaches may be more suitable depending on the nature of the dataset and the goals of the analysis.

By effectively handling outliers, we can ensure that our data analysis is accurate and reliable, leading to more meaningful insights and decision-making.

Handling Inconsistent Data

One of the challenges in data cleaning is dealing with inconsistent data. Inconsistent data refers to data that does not conform to a specific format or pattern. This can include misspelled words, variations in capitalization, inconsistent date formats, or different representations of the same entity.

To handle inconsistent data, there are several techniques that can be employed:

- Data Standardization: One approach is to standardize the data by applying rules to bring it to a consistent format. For example, converting all text to lowercase, fixing misspellings using spell-checking algorithms, or converting dates into a standardized format.

- String Matching: Another technique is to perform string matching or fuzzy matching to identify and correct inconsistencies. This involves comparing strings and finding similarities based on predefined algorithms. This can be particularly useful in identifying duplicate entries or variations of the same entity.

- Regular Expressions: Regular expressions can be used to identify and extract patterns or specific formats within the data. This allows for targeted cleaning and manipulation of inconsistent data based on defined patterns.

- Data Validation: Implementing data validation checks can help identify inconsistencies by comparing the data against predefined rules or reference data. This can be done through automated validation scripts or manual verification processes.

- Domain Knowledge: Having a good understanding of the domain and the context of the data can also help in identifying inconsistencies. This can involve domain-specific knowledge of formats, rules, or patterns that need to be applied to the data.

By employing these techniques, it is possible to effectively handle inconsistent data and ensure a clean and standardized dataset for analysis and modeling purposes.

Dealing with Data Formatting Issues

Data formatting issues can be a common challenge when working with datasets in Python. Inconsistent data formats can lead to errors in data analysis and hinder the overall quality of the analysis. Fortunately, there are several techniques and libraries available in Python to tackle these formatting issues.

One common formatting issue is when dates are stored in different formats within a dataset. For example, some dates may be formatted as “dd/mm/yyyy” while others are in the “mm/dd/yyyy” format. To address this, Python provides the datetime library which allows you to convert dates between different formats using the strftime() and strptime() functions.

Another formatting issue arises when numerical values are stored as strings instead of actual numbers. This can happen when data is imported from external sources or when the dataset contains missing or inconsistent values. In such cases, you can use the astype() method in pandas to convert the data type of a column to numeric. This will allow you to perform mathematical operations and statistical analysis on the numerical data.

Furthermore, it is common to encounter numeric values with incorrect decimal separators or thousands separators. For example, a dataset may have numbers represented with a comma (“,”) as a decimal separator instead of a dot (“.”). In such cases, you can use the replace() method in pandas to replace the incorrect separator and convert the string to a numeric value.

Handling string formatting issues is also essential in data cleaning. It is not uncommon to come across inconsistent capitalization, leading or trailing spaces, or extra characters in string columns. Python provides various string manipulation methods, such as lower(), upper(), strip(), and replace(), to address these issues. These methods can be applied to the columns containing the strings to ensure consistency and accuracy in data analysis.

Another common formatting issue is dealing with categorical variables stored as integers. In some datasets, categorical variables may be represented by numeric codes, which can make the analysis challenging. To handle this, you can create a mapping dictionary to associate each code with its respective category. Then, using the replace() method in pandas, you can replace the codes with their corresponding category names.

In a nutshell, data formatting issues can pose significant challenges during data cleaning and analysis. However, with the right techniques and libraries in Python, these issues can be effectively addressed. By standardizing date formats, converting strings to numeric values, handling incorrect separators, and managing categorical variables, you can ensure accurate and reliable data analysis in your Python projects.

Handling Categorical Data

When working with data, it is common to come across categorical variables. These variables represent characteristics or attributes that can take on a limited set of values, such as “red,” “green,” or “blue.” Categorical data can pose unique challenges in data analysis and machine learning tasks. Therefore, it is important to know how to handle them effectively.

There are several techniques you can use to handle categorical data in Python. Let’s explore some of the most common approaches:

1. One-Hot Encoding: One-hot encoding is a popular technique used to convert categorical variables into a binary vector representation. Each category is transformed into a separate binary feature, where a value of 1 indicates the presence of that category and 0 denotes its absence. This method is widely used for categorical variables that don’t exhibit any inherent order.

2. Label Encoding: Label encoding is another technique used to convert categorical variables into numerical values. Each category is assigned a unique numerical label, which can be useful in algorithms that require numerical input. However, it is important to note that label encoding may inadvertently introduce an arbitrary order to the categories, which can lead to incorrect interpretations.

3. Ordinal Encoding: Ordinal encoding is similar to label encoding, but it takes into account the ordinal relationship between categories. This technique assigns numerical labels in a way that preserves the order of the categories. For example, in a variable like “low,” “medium,” and “high,” the labels assigned would reflect the order of these values. Ordinal encoding can be helpful when dealing with categorical variables that have an inherent order.

4. Binary Encoding: Binary encoding is a technique that combines aspects of one-hot encoding and label encoding. It represents each category as a binary code, where each bit represents the presence or absence of a category. This encoding method reduces the dimensionality of the feature space and is particularly useful when dealing with categorical variables with a large number of categories.

5. Count Encoding: Count encoding replaces each category with the count of its occurrences in the dataset. This technique can be useful when the frequency of a category is expected to have an impact on the target variable. It effectively captures the relationship between category frequency and the target without introducing new dimensions to the feature space.

6. Target Encoding: Target encoding is a technique that replaces each category with the mean value of the target variable for that category. This encoding method can be effective in capturing the relationship between the categories and the target variable. However, it is important to be cautious of overfitting when using target encoding.

These techniques provide various ways to handle categorical data in Python, allowing you to choose the most appropriate method based on your specific dataset and analysis needs. By correctly handling categorical data, you can ensure that your analyses and machine learning models are accurate and meaningful.

Handling Numerical Data

When working with data, it is common to encounter numerical features that require cleaning or preprocessing. Handling numerical data effectively is crucial for accurate analysis and modeling. Here are some important techniques to consider when dealing with numerical data in Python:

1. Removing Outliers: Outliers are data points that deviate significantly from the average value of the dataset. These can introduce bias and have a negative impact on the analysis. Identify outliers by examining the distribution of the numerical feature and remove them using appropriate statistical techniques or domain knowledge.

2. Imputing Missing Values: Missing values in numerical data can pose a challenge. Before proceeding with any analysis, it is important to handle missing values appropriately. You can choose to impute them using techniques such as mean, median, or regression-based imputation.

3. Scaling and Normalization: When working with numerical data, it is essential to scale or normalize the features to a common range. This helps ensure that all variables contribute equally to the analysis. Scaling techniques like Standardization or Min-Max scaling can be used depending on the requirements of your analysis.

4. Handling Skewed Data: Skewed data can negatively affect the performance of models by violating assumptions such as normality. Use techniques like log transformation or box-cox transformation to address skewness and make the data more suitable for analysis.

5. Binning: Binning is a technique used to convert continuous numerical variables into discrete categories. This can help simplify the analysis or capture nonlinear relationships. Binning can be done manually or using automated algorithms like equal width or equal frequency.

6. Feature Engineering: Creating new features from existing numerical data can often improve model performance. These can be derived by applying mathematical operations, aggregations, or interactions between variables. Feature engineering allows the model to capture more complex relationships and patterns in the data.

7. Handling Multicollinearity: Multicollinearity occurs when there is a high correlation between two or more numerical variables. This can impact the model’s stability and interpretability. Use techniques like correlation analysis and variance inflation factor (VIF) to detect and handle multicollinearity.

By applying these techniques, you can ensure that your numerical data is clean, standardized, and ready for analysis or modeling. Remember that the specific approach may vary depending on the nature of the data and the requirements of your analysis.

Conclusion

In conclusion, cleaning data in Python is an essential step in any data analysis or machine learning project. By using the pandas library and its powerful functions, we can effectively handle missing values, outliers, and inconsistent data. We learned how to detect and remove duplicate records, standardize data formats, and correct invalid values.

Moreover, we explored techniques to handle missing data, such as imputation or deletion. By using data visualization, we can gain insights into the quality of our data and identify patterns or discrepancies. Cleaning data is crucial for ensuring the accuracy and reliability of our analyses, and Python provides a robust set of tools to achieve this.

Remember, data cleaning is an iterative process, and there isn’t a one-size-fits-all solution. It requires careful observation, domain knowledge, and understanding of the specific dataset. With the techniques discussed in this article, you are now empowered to clean your data effectively and prepare it for further analysis or modeling.

FAQs

Q: Why is cleaning data important in Python?

Cleaning data in Python is crucial because raw data often contains errors, inconsistencies, missing values, and other issues that can hinder data analysis and modeling. Cleaning data ensures data quality, accuracy, and reliability, leading to more accurate and meaningful insights and results.

Q: What are some common data cleaning tasks in Python?

Some common data cleaning tasks in Python include handling missing values, removing duplicates, correcting inconsistencies, standardizing data formats, transforming data types, and dealing with outliers. Additionally, data cleaning may involve parsing and extracting relevant information from unstructured or messy data sources.

Q: How can I handle missing values in Python?

There are various ways to handle missing values in Python. You can choose to remove rows or columns with missing values using the dropna() function or fill in missing values with a specific value or using methods like mean, median, or mode. Pandas library provides several convenient functions for missing value treatment, such as fillna() and interpolate().

Q: What is the importance of removing duplicates in data cleaning?

Removing duplicates is essential in data cleaning because duplicated records can skew analysis results, lead to biased insights, and waste computational resources. By eliminating duplicates, you ensure that each record is unique, preventing any unnecessary duplication of information and maintaining the integrity of the dataset.

Q: How can I handle inconsistent data in Python?

Handling inconsistent data in Python involves identifying and correcting inconsistencies such as different spellings, capitalization variations, or different representations of the same values. You can perform string manipulation operations, use regular expressions, or apply data cleaning techniques like data standardization to ensure consistency across the dataset.