Are you looking for a quick and efficient way to extract and analyze website data? Look no further! In this article, we will explore how to pull website data into Excel, providing you with a step-by-step guide to make the process seamless and hassle-free. Whether you need to track website performance, analyze competitors’ data, or gather information for research purposes, being able to extract website data into Excel can save you significant time and effort. With the right tools and techniques, you can effortlessly import website data into Excel and unlock valuable insights. So, let’s dive in and discover the methods that will empower you to easily pull website data into Excel!

Inside This Article

- Overview

- Step 1: Installing the required software

- Step 2: Identifying the data to be extracted

- Step 3: Accessing the website data

- Step 4: Extracting data into Excel

- Step 5: Cleaning and formatting the extracted data

- Step 6: Automating the Data Extraction Process

- Step 7: Updating the extracted data

- Step 8: Troubleshooting common issues

- Step 9: Advanced techniques for website data extraction

- Step 10: Best practices for pulling website data into Excel

- Conclusion

- FAQs

Overview

When it comes to pulling website data into Excel, the possibilities are endless. With the right tools and techniques, you can extract valuable data from websites and import it directly into Excel, saving you time and effort. Whether you need to gather data for analysis, reporting, or any other purpose, this guide will walk you through the steps to efficiently pull website data into Excel.

The process involves installing the necessary software, identifying the data you want to extract, accessing the website, and extracting the data into Excel. You’ll also learn how to clean and format the data, automate the extraction process, troubleshoot common issues, and even explore advanced techniques.

By the end of this article, you’ll have a solid understanding of how to gather website data and leverage Excel’s power to analyze and manipulate it. So, let’s dive in and discover how you can pull website data into Excel and unlock a world of possibilities!

Step 1: Installing the required software

Before you can start pulling website data into Excel, you’ll need to install a few necessary software tools. These tools will enable you to extract data from websites and import it directly into your Excel spreadsheets. Here are the key software components you’ll need to get started:

1. Web Scraping Tool: A web scraping tool extracts data from pages that do not offer a download or export option. Options range from point-and-click applications such as Octoparse to code libraries such as BeautifulSoup and Scrapy. Whichever you choose, it should let you specify the data you want to extract and control how the results are organized and formatted.

2. Excel Add-ins: Install the necessary Excel add-ins to facilitate the data extraction process. These add-ins provide additional functionality and integration with the web scraping tool you choose. Make sure to follow the installation instructions provided by the add-in provider to ensure seamless integration with Excel.

3. Web Browser Extensions: Some web scraping tools require browser extensions to enhance their functionality. These extensions allow you to interact with the web scraping tool directly from your browser. Install the required browser extensions for better control and ease of use.

4. APIs: If you plan to extract data from websites that provide APIs (Application Programming Interfaces), there is usually nothing to install for the API itself. Instead, you may need to register for an API key or access token and, optionally, install a client library for the language you script in. Check the documentation of the websites you want to scrape for any API-related instructions.

Once you have successfully installed the required software tools, you can proceed to the next step of identifying the specific data you want to extract from websites.

Step 2: Identifying the data to be extracted

Before you can pull website data into Excel, you need to identify the specific data that you want to extract. This crucial step helps you determine what information is relevant to your needs and streamlines the extraction process.

Here are some key considerations when identifying the data to be extracted:

- Define your objective: Clearly articulate the purpose of extracting data from the website. Are you looking for product prices, customer reviews, or market trends? Having a clear objective helps you narrow down what data you need.

- Research the website structure: Familiarize yourself with the layout and structure of the website you want to extract data from. Take note of the different sections, categories, and page hierarchy that contain the desired data.

- Inspect the HTML source code: Use the developer tools in your web browser to view the website’s HTML source code. This allows you to analyze the elements and tags that hold the data you want. Look for specific identifiers like class names or IDs that can help you target the data accurately.

- Consider data dependencies: Determine if the data you want to extract relies on other data present on the website. For example, if you need product information, you may also need to extract details from linked pages such as product specifications or user ratings.

- Consider data formats: Take note of the data formats used on the website. Are the values in text, numbers, dates, or images? Understanding the data formats helps you plan for the appropriate extraction methods and data transformations in Excel.

By carefully identifying the data you want to extract, you can streamline the extraction process and ensure that you gather the information you require accurately and efficiently.
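The inspection step above can also be done in a script. The following is a minimal sketch, assuming a small hypothetical page fragment (`SAMPLE_HTML`) in place of a real page; it lists every tag that carries an `id` or `class` attribute, which are the identifiers you would later target.

```python
from html.parser import HTMLParser

# Hypothetical page fragment; a real page would be saved from the browser or fetched.
SAMPLE_HTML = """
<div id="products">
  <span class="name">Widget</span>
  <span class="price">9.99</span>
</div>
"""

class IdentifierCollector(HTMLParser):
    """Record each tag that has an id or class attribute."""

    def __init__(self):
        super().__init__()
        self.found = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if "id" in attr_map or "class" in attr_map:
            self.found.append((tag, attr_map.get("id"), attr_map.get("class")))

collector = IdentifierCollector()
collector.feed(SAMPLE_HTML)
for tag, element_id, css_class in collector.found:
    print(tag, element_id, css_class)
```

The collected tuples give a quick inventory of candidate selectors before you commit to an extraction method.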

Step 3: Accessing the website data

Once you have identified the data you wish to extract from a website, the next step is to access that data. There are several ways to accomplish this, depending on the structure and accessibility of the website. Here are a few methods you can use:

1. Manual extraction: The simplest method is to manually navigate to the webpage where the data is located and copy-paste it into Excel. This approach works well for small datasets or when the information is readily accessible.

2. Using web scraping tools: Web scraping software or tools are specifically designed to extract data from websites. These tools allow you to specify the website URL, select the data you want to extract, and automatically retrieve it into a structured format, such as Excel. Popular options include the Python libraries BeautifulSoup and Scrapy, which require some coding, and the point-and-click application Octoparse.

3. API integration: Many websites provide Application Programming Interfaces (APIs) that allow developers to access their data programmatically. By integrating with the website’s API, you can retrieve the required data directly into Excel without the need for manual extraction or scraping. This method is particularly useful for accessing real-time or frequently updated data.

4. Data export/import: Some websites offer the option to export data in a downloadable file format, such as CSV or Excel. You can take advantage of this feature by exporting the data and then importing it into Excel. This method is convenient when the website provides a bulk download option or when the data is structured in a way that is easily importable into Excel.

5. Database connections: If the website’s data is stored in a database and you have been granted access to it, you can establish a connection to the database from Excel using appropriate drivers or connectors. This allows you to query and retrieve the data directly into Excel, providing real-time or on-demand access to the website’s data.

When accessing website data, it’s important to understand any legal or ethical considerations that may apply. Make sure to review the website’s terms of service and ensure that your data extraction methods comply with any restrictions or usage guidelines imposed by the website owner.
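One machine-readable signal of a site owner's wishes is the `robots.txt` file. The sketch below, which uses Python's standard `urllib.robotparser`, checks a URL against a hypothetical `robots.txt` body (`SAMPLE_ROBOTS_TXT`) and a made-up user agent name. It is only an illustration and does not replace reading the terms of service.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real file lives at the root of the website.
SAMPLE_ROBOTS_TXT = """
User-agent: *
Disallow: /private/
"""

def is_path_allowed(robots_txt, user_agent, url):
    """Return True if the robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

public_ok = is_path_allowed(SAMPLE_ROBOTS_TXT, "my-excel-importer", "https://example.com/prices")
private_ok = is_path_allowed(SAMPLE_ROBOTS_TXT, "my-excel-importer", "https://example.com/private/data")
print(public_ok, private_ok)
```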

By following these methods, you can gain access to the data you need from a website and move on to the next step of extracting and manipulating the data in Excel.
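For the API route, the response is typically JSON that has to be flattened into rows before Excel can use it. Here is a minimal sketch, assuming a hypothetical payload with an `items` list and the field names `sku` and `price`; real endpoints, field names, and authentication differ per site.

```python
import json

# Hypothetical API payload; a real one would come from the website's API.
SAMPLE_RESPONSE = '{"items": [{"sku": "A1", "price": 9.99}, {"sku": "B2", "price": 14.5}]}'

def json_to_rows(payload, fields):
    """Flatten the 'items' list of a JSON payload into a header row plus data rows."""
    records = json.loads(payload)["items"]
    rows = [list(fields)]
    for record in records:
        # Missing fields become empty cells instead of raising an error.
        rows.append([record.get(field, "") for field in fields])
    return rows

rows = json_to_rows(SAMPLE_RESPONSE, ["sku", "price"])
for row in rows:
    print(row)
```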

Step 4: Extracting data into Excel

Now that you have identified the data you want to extract from the website, it’s time to dive into the process of extracting that data into Excel. This step will allow you to gather the information you need and manipulate it further for analysis or reporting purposes.

There are several methods you can use to extract data into Excel from a website. Let’s explore a few of the most common and effective approaches:

1. Manual Copy and Paste: The simplest method is to manually copy the data from the website and paste it directly into an Excel spreadsheet. This method works well for small amounts of data or when the website does not provide an option for exporting data in a structured format.

2. Importing from Web Data Source: Recent versions of Excel can import data directly from a web page through the “From Web” option under “Get Data” on the “Data” tab. You enter the URL of the page, Excel previews the tables it detects, and you select the ones to load into your spreadsheet.

3. Using Web Scraping Tools: For more complex data extraction requirements, you can turn to web scraping tools. These tools allow you to create custom scripts or use pre-built templates to extract data from websites. The extracted data can then be saved directly into an Excel file.

4. Utilizing APIs: Some websites offer APIs (Application Programming Interfaces) that allow you to retrieve data programmatically. By accessing the API endpoints, you can retrieve the required data and integrate it into Excel using a programming language such as Python or VBA.

It’s important to note that the method you choose will depend on the complexity of the data you want to extract, your technical skills, and the specific capabilities of the website you are working with.

Once you have successfully extracted the data into Excel, you can further manipulate, analyze, and visualize the data to gain insights and make informed decisions.
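As an illustration of the scripted approach, the sketch below parses an HTML table and produces CSV text, a format Excel opens directly. The table content (`SAMPLE_TABLE`) is hypothetical, and the parser is deliberately simple: it assumes one flat table without nested tables or merged cells.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical table; a real one would come from the downloaded page.
SAMPLE_TABLE = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>14.50</td></tr>
</table>
"""

class TableParser(HTMLParser):
    """Collect the text of every th/td cell, grouped by table row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None
        self._cell = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

def table_to_csv(html):
    """Return the table in html as CSV text."""
    parser = TableParser()
    parser.feed(html)
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerows(parser.rows)
    return buffer.getvalue()

csv_text = table_to_csv(SAMPLE_TABLE)
print(csv_text)
```

To open the result in Excel, write `csv_text` to a file with a `.csv` extension.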

In the next step, we will focus on cleaning and formatting the extracted data to ensure its accuracy and usability.

Step 5: Cleaning and formatting the extracted data

Once you have successfully extracted the data from the website and imported it into Excel, it’s time to clean and format the data to make it more organized and presentable. This step is crucial to ensure that the data is accurate and manageable for further analysis or reporting.

The first task in cleaning the extracted data is removing any unnecessary characters, symbols, or formatting artifacts. This includes deleting extra spaces, removing special characters, and getting rid of unwanted data components such as headers, footers, or navigation menus.

After removing the unnecessary elements, it’s important to check for any missing or incomplete data. You can use Excel’s functions or formulas to identify and fill in the gaps. This ensures that your data is complete and consistent, making it more reliable for analysis.

Next, you might need to rearrange the data to match your desired format. This can involve reordering columns, merging cells, or splitting data into separate columns. These actions can be performed using Excel’s built-in functions or by manually manipulating the data.

Formatting the extracted data is another important step. This includes applying consistent fonts, colors, and styles to improve the visual appeal of the data. Additionally, you can use conditional formatting to highlight specific data points or apply data validation rules to ensure data integrity.

During the cleaning and formatting process, it’s also a good idea to check for and eliminate any duplicates or errors in the data. Excel provides tools such as Remove Duplicates and Error Checking that can help you detect and resolve these issues efficiently.

Once you have cleaned and formatted the extracted data, you can save it as a separate file or overwrite the original file. Remember to adopt proper file naming conventions and directory structures to easily locate the file in the future.

By following these steps, you can convert the raw extracted data into a clean, well-organized, and visually appealing format in Excel. This will make it easier for you to analyze, report, or share the data with others, maximizing the value and usability of the extracted website data.
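If you prefer to clean the data before it reaches Excel, the same steps can be scripted. This is a minimal sketch under stated assumptions: the rows (`RAW_ROWS`) are hypothetical, the second column holds prices, and `N/A` is an arbitrary placeholder for missing values.

```python
import re

# Hypothetical raw rows as they might come out of a scrape.
RAW_ROWS = [
    ["  Widget ", "$9.99"],
    ["Gadget", ""],
    ["  Widget ", "$9.99"],  # exact duplicate of the first row
]

def clean_rows(rows, price_index=1, missing="N/A"):
    """Trim whitespace, strip non-numeric price characters, fill gaps, drop duplicates."""
    cleaned, seen = [], set()
    for row in rows:
        # Collapse runs of whitespace and trim both ends of every cell.
        cells = [re.sub(r"\s+", " ", cell).strip() for cell in row]
        # Keep only digits, the decimal point, and a minus sign in the price column.
        cells[price_index] = re.sub(r"[^\d.\-]", "", cells[price_index])
        # Replace empty cells with the placeholder.
        cells = [cell if cell else missing for cell in cells]
        key = tuple(cells)
        if key not in seen:
            seen.add(key)
            cleaned.append(cells)
    return cleaned

cleaned = clean_rows(RAW_ROWS)
print(cleaned)
```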

Step 6: Automating the Data Extraction Process

Automating the data extraction process in Excel can save you time and effort, especially if you need to regularly update the extracted data. With automation, you can set up Excel to automatically retrieve the latest data from a website at specified intervals. This ensures that your Excel file always contains up-to-date information without manual intervention.

Here are some steps to automate the data extraction process in Excel:

1. Set up a refresh schedule: In Excel, go to the “Data” tab and select “Queries & Connections” (labeled “Connections” in older versions). Right-click the connection that retrieves the website data and choose “Properties”. On the “Usage” tab of the properties window, find the “Refresh control” section and select the “Refresh every” option. Enter the interval in minutes, for example 60 for hourly updates. Click “OK” to save the changes.

2. Configure data refresh settings: In the same “Refresh control” section, choose how else Excel should refresh the data. You can refresh the data when opening the file and enable background refresh so that you can keep working while the query runs. Select the appropriate options and click “OK”.

3. Protect your data source: If the website data source requires authentication or requires you to log in, ensure that the necessary credentials are saved for the connection. This allows Excel to sign in and retrieve the data during each refresh.

4. Save your changes: Once you have configured the refresh settings and data source credentials, save the Excel file. The timed refresh runs only while the workbook is open; a closed workbook is updated the next time it is opened if “Refresh data when opening the file” is enabled.

By automating the data extraction process, you can focus on analyzing and utilizing the extracted data, rather than spending time manually updating it. This feature is especially useful when dealing with time-sensitive information or when you need to share the data with others who depend on real-time updates.

Remember to review and test the automated data extraction periodically to ensure that it is functioning correctly. Check for any changes in the website’s structure or data format that may require adjustments to your automation settings.

With Excel’s automation capabilities, you can streamline your data extraction process and free up valuable time for other tasks. Take advantage of this feature to make your workflow more efficient and keep your Excel files updated with the latest website data.
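When the data is pulled by a script rather than by an Excel connection, the same "refresh every N minutes" idea can be expressed in a few lines. The sketch below is an illustration only: the `state` dictionary, the function names, and the stand-in fetch functions are all hypothetical.

```python
from datetime import datetime, timedelta

def is_refresh_due(last_refresh, now, interval_minutes):
    """Return True when at least interval_minutes have passed since last_refresh."""
    return now - last_refresh >= timedelta(minutes=interval_minutes)

def refresh_if_due(state, now, interval_minutes, fetch):
    """Run fetch() and record the time if the data was never loaded or is stale."""
    last = state.get("last_refresh")
    if last is None or is_refresh_due(last, now, interval_minutes):
        state["data"] = fetch()
        state["last_refresh"] = now
        return True
    return False

# Demonstration with fixed timestamps and stand-in fetch functions.
state = {}
start = datetime(2024, 1, 1, 9, 0)
first = refresh_if_due(state, start, 60, lambda: "snapshot 1")
too_soon = refresh_if_due(state, start + timedelta(minutes=30), 60, lambda: "snapshot 2")
later = refresh_if_due(state, start + timedelta(minutes=60), 60, lambda: "snapshot 3")
print(first, too_soon, later, state["data"])
```

Passing the current time in as an argument, rather than reading the clock inside the function, keeps the logic easy to test.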

Step 7: Updating the extracted data

Once you have successfully extracted the desired data from a website into Excel, it’s important to keep that data updated to ensure its accuracy and relevance. Websites often have dynamic content that changes frequently, so it’s crucial to have a system in place for updating your extracted data.

The process of updating the extracted data can be done manually or automated, depending on your requirements and the frequency of data changes on the website. Let’s explore both methods:

1. Manual updates:

If the website data you are extracting doesn’t change frequently, you can manually update the extracted data as needed. This involves revisiting the website, re-extracting the data, and replacing the outdated data in your Excel sheet.

To manually update the data, you can follow the same steps you used for the initial extraction. However, it’s important to keep track of the changes you make and maintain proper documentation so that you can easily identify and update the specific data points that have been modified.

2. Automated updates:

If the website data you are extracting undergoes frequent changes, it’s more efficient to automate the updating process. Automation allows you to save time and effort by automatically pulling the updated data into Excel without manual intervention.

To automate the updating process, you can make use of various techniques such as web scraping tools, macros, or scripts. These tools can be programmed to periodically revisit the website and extract the updated data at predefined intervals or trigger events. This ensures that your Excel sheet remains up to date with the most current information available on the website.

When using automation, it’s essential to be mindful of any legal and ethical considerations related to web scraping or data extraction. Make sure to review the website’s terms and conditions and respect any data usage policies or restrictions.

Additionally, it’s crucial to regularly test and validate the automated update process to ensure its reliability. Monitor the extracted data regularly to detect any issues or discrepancies and make any necessary adjustments to your automation setup.

Whether you choose manual updates or automated solutions, the key is to establish a consistent updating schedule to keep the extracted data accurate and reliable. Set reminders or create a calendar to remind yourself or your team to update the data at regular intervals.

Remember, maintaining updated data not only ensures the accuracy of your analysis and reporting but also helps you make timely and informed decisions based on the most current information.
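To keep track of what changed between two extractions, you can compare the old and new rows by a key column. The following is a minimal sketch, assuming the first column uniquely identifies each row; the sample rows are hypothetical.

```python
def diff_snapshots(old_rows, new_rows, key_index=0):
    """Compare two extractions and report added, removed, and changed rows."""
    old = {row[key_index]: row for row in old_rows}
    new = {row[key_index]: row for row in new_rows}
    return {
        "added": [new[k] for k in new if k not in old],
        "removed": [old[k] for k in old if k not in new],
        "changed": [(old[k], new[k]) for k in new if k in old and old[k] != new[k]],
    }

# Hypothetical extractions taken on two different days.
yesterday = [["A1", "9.99"], ["B2", "14.50"]]
today = [["A1", "10.49"], ["C3", "3.00"]]

changes = diff_snapshots(yesterday, today)
print(changes)
```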

Step 8: Troubleshooting common issues

While pulling website data into Excel can be a powerful and efficient process, you may encounter some common issues along the way. In this step, we will address these issues and provide troubleshooting techniques to help you overcome them.

1. Connection errors: One of the most common issues is encountering connection errors while accessing the website data. This can happen due to various reasons such as unstable internet connection or server downtime. To troubleshoot this issue, check your internet connection, try accessing the website at a different time, or contact the website’s administrator to ensure that the server is up and running.

2. Inconsistent data structure: Websites often have dynamic content with varying data structures. This can create challenges when extracting data into Excel, especially if the structure changes frequently. To troubleshoot this issue, consider using web scraping tools or scripts that are capable of adapting to different data structures. Additionally, verify whether the website offers an API that provides a consistent data feed, as this can be a more reliable and structured approach.

3. Authentication issues: Some websites require user authentication, such as login credentials, before allowing access to certain data. If you encounter authentication issues while pulling data into Excel, verify that you have provided the correct credentials and ensure that your account has the necessary permissions. Additionally, check if the website offers an API that supports authentication and allows you to access the desired data securely.

4. Data overload: Pulling a large amount of data from a website into Excel can sometimes lead to performance issues or even crashes. To troubleshoot this issue, consider extracting data in smaller batches or applying filters to retrieve only the necessary information. This can help reduce the load on Excel and improve overall performance.

5. Data format inconsistencies: Websites may present data in different formats, such as dates, numbers, or text, which can cause inconsistencies when importing into Excel. If you encounter formatting issues, explore Excel’s data formatting options to ensure that the extracted data is presented correctly. Additionally, consider using data cleansing techniques like text-to-columns or custom formulas to standardize the format of the imported data.

6. Website changes: Websites frequently undergo updates and changes that can affect the data extraction process. When encountering issues due to website changes, review the website’s structure to identify any modifications. If necessary, adjust your data extraction method or scripts accordingly to adapt to the updated website layout.

By troubleshooting these common issues, you can ensure a smoother and more efficient process of pulling website data into Excel. Remember to stay vigilant and adaptable, as websites and data structures can evolve over time.
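For scripted extractions, temporary connection errors are often handled by retrying with an increasing delay. Here is a minimal sketch of that idea; `fetch_with_retry` and `flaky_fetch` are hypothetical names, and the demonstration records the delays instead of actually sleeping.

```python
import time

def fetch_with_retry(fetch, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(), retrying on ConnectionError with exponentially growing delays."""
    for attempt in range(attempts):
        try:
            return fetch()
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller see the error
            sleep(base_delay * 2 ** attempt)

# Stand-in for a download that fails twice before succeeding.
calls = {"count": 0}

def flaky_fetch():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return "<html>ok</html>"

delays = []
result = fetch_with_retry(flaky_fetch, sleep=delays.append)
print(result, delays)
```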

Step 9: Advanced techniques for website data extraction

When it comes to extracting website data into Excel, there are various advanced techniques that can give you more control and flexibility. These techniques allow you to extract specific data points, handle complex website structures, and streamline the extraction process. In this step, we will explore some of these advanced techniques.

1. Using regular expressions: Regular expressions are powerful patterns used to match and extract specific data from web pages. By using regular expressions, you can precisely define the data you want to extract, even if it is embedded in complex HTML tags or structures.

2. XPath extraction: XPath is a language used to navigate XML and HTML documents. It provides a systematic way to locate elements and attributes within the document structure. By using XPath, you can target specific elements on a web page and extract their data.

3. Handling dynamic content: Many websites use dynamic content that is loaded using JavaScript or AJAX. This content may not be immediately available when the page is loaded. To extract this dynamic content, you can use browser automation to simulate user interaction, or call the underlying API that the page itself requests its data from.

4. Dealing with pagination: Some websites split their data across multiple pages, with pagination controls to navigate through them. To extract data from all pages, you can automate the process of clicking through the pages and extracting the data incrementally.

5. Handling login and authentication: If the website requires user authentication or login, you can use techniques like session management or API integration to handle the authentication process and extract data from restricted areas of the website.

6. Advanced data manipulation: Once the data is extracted, Excel offers a wide range of data manipulation features. You can use formulas, macros, and pivot tables to further analyze and transform the extracted data to meet your specific needs.

7. Performance optimization: When dealing with large datasets or complex extraction tasks, optimizing the extraction process is crucial. Techniques like parallel processing, caching, and using efficient algorithms can significantly improve the performance of your data extraction workflow.

By mastering these advanced techniques, you can elevate your website data extraction skills and overcome more complex extraction challenges. Experimentation, practice, and continuous learning are key to becoming proficient in these techniques.
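The first two techniques can be tried with Python's standard library alone. The sketch below runs a regular expression and a simple XPath query against a hypothetical, well-formed fragment (`SAMPLE_XHTML`). Note that `xml.etree.ElementTree` supports only a subset of XPath and requires well-formed markup, which many real pages are not; a dedicated HTML library such as lxml is a common choice for those.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical, well-formed page fragment.
SAMPLE_XHTML = """
<html><body>
  <div class="product"><span class="name">Widget</span><span class="price">$9.99</span></div>
  <div class="product"><span class="name">Gadget</span><span class="price">$14.50</span></div>
</body></html>
"""

# Regular expression: capture amounts that follow a dollar sign.
prices = re.findall(r"\$(\d+\.\d{2})", SAMPLE_XHTML)

# XPath: select the name span inside every product div.
root = ET.fromstring(SAMPLE_XHTML.strip())
names = [el.text for el in root.findall(".//div[@class='product']/span[@class='name']")]

print(list(zip(names, prices)))
```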

Step 10: Best practices for pulling website data into Excel

When it comes to pulling website data into Excel, there are certain best practices that you should follow to ensure a smooth and efficient process. These practices are designed to help you extract the data accurately and efficiently, as well as maintain the integrity and quality of the extracted information. Here are some key best practices to keep in mind:

1. Identify the specific data you need: Before you start pulling website data into Excel, clearly define the specific information you want to extract. Identify the relevant fields, tables, or sections on the website that contain the data you need.

2. Automate the process: If you find yourself needing to extract data regularly from the same website, consider automating the process using tools or scripts. This will save you time and effort in the long run.

3. Understand the website structure: Familiarize yourself with the structure of the website you’re pulling data from. Understand how the data is organized, whether it’s in HTML tables, lists, or other formats. This knowledge will help you navigate the website and extract the data more effectively.

4. Use web scraping tools: There are various web scraping tools available that can assist you in extracting data from websites and importing it into Excel. These tools provide features like automatic data extraction, data cleaning, and formatting options.

5. Be mindful of website terms and conditions: Before pulling data from a website, make sure you’re familiar with the website’s terms and conditions. Some websites may have restrictions on data extraction, so it’s important to comply with their guidelines or seek permission if necessary.

6. Handle pagination and navigation: If the data you’re extracting spans multiple pages or requires navigation through different sections of the website, make sure your extraction process can handle this. Ensure that you capture all the relevant data by properly configuring your scraping tool or script.

7. Verify the accuracy of the extracted data: Once you’ve imported the website data into Excel, double-check the accuracy of the extracted information. Look for any discrepancies or formatting issues and make the necessary adjustments to ensure the data is correct.

8. Regularly update the extracted data: If the website data you’re pulling into Excel is frequently updated, establish a regular update schedule to keep your Excel file up to date. Automate the update process if possible, or manually update the data at regular intervals.

9. Handle website changes: Websites may undergo changes, such as layout modifications or URL structure updates. Monitor the website for any changes that may impact your data extraction process and be prepared to adjust your scraping methods accordingly.

10. Keep an eye on performance: If you’re dealing with a large volume of data or complex extraction processes, monitor the performance of your scraping tool or script. Optimize your code or make necessary hardware upgrades to ensure efficient extraction without any performance bottlenecks.

By following these best practices, you can streamline the process of pulling website data into Excel. Remember to adapt these practices as per your specific requirements and the websites you’re extracting data from. Now you can confidently extract and analyze website data in Excel with ease and accuracy.
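As an illustration of the pagination practice, the sketch below collects rows page by page until a page comes back empty. `fetch_page` stands for whatever function downloads and parses one page; here it is replaced by a hypothetical in-memory lookup (`FAKE_SITE`), and `max_pages` is an assumed safety limit.

```python
def collect_all_pages(fetch_page, max_pages=100):
    """Request pages 1, 2, 3, ... and stop at the first empty page or at max_pages."""
    rows, page = [], 1
    while page <= max_pages:
        batch = fetch_page(page)
        if not batch:
            break
        rows.extend(batch)
        page += 1
    return rows

# Stand-in for a two-page website.
FAKE_SITE = {1: [["Widget", "9.99"]], 2: [["Gadget", "14.50"]]}

all_rows = collect_all_pages(lambda page: FAKE_SITE.get(page, []))
print(all_rows)
```

The page limit guards against sites that keep returning data indefinitely, for example by repeating the last page.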

Conclusion

Pulling website data into Excel is a valuable skill that can streamline data analysis and reporting processes. With the help of powerful functions and tools like Power Query and Web Queries, users can extract information from websites and import it directly into their Excel spreadsheets. This ability allows for efficient data consolidation, manipulation, and visualization, saving time and effort for professionals in various fields.

By following the steps outlined in this guide, users can harness the power of Excel to conveniently access and work with real-time data from the web. Whether it is for market research, financial analysis, or any other data-driven tasks, pulling website data into Excel opens up a world of possibilities and empowers users to make informed decisions based on accurate and up-to-date information. So go ahead, give it a try! Start exploring the endless possibilities that arise when you combine the power of Excel with the vast resources available on the internet.

FAQs

1. How do I pull website data into Excel?

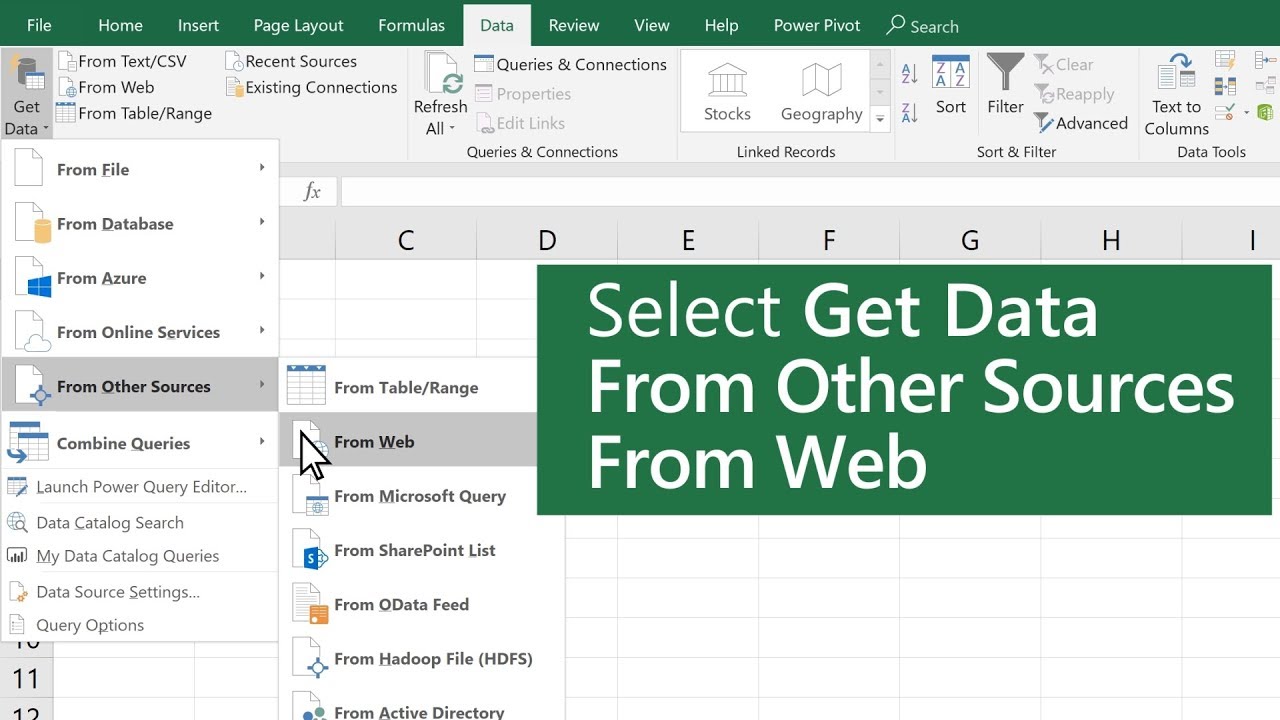

To pull website data into Excel, you can use the “Get Data” feature available in newer versions of Excel. Here are the steps to follow:

- Open Excel and navigate to the “Data” tab.

- Click on “Get Data”, then choose “From Other Sources” and select “From Web”.

- Enter the URL of the website from which you want to pull the data.

- Excel will connect to the website and display a preview of the data.

- Select the table or data you want to import and click “Load” to import it into Excel.

2. Can I pull data from any website?

While it is possible to pull data from many websites using Excel’s “Get Data” feature, some websites may have restrictions in place that prevent the extraction of data. Additionally, websites that require authentication or have complex JavaScript elements may pose challenges when trying to pull their data into Excel. The feature works most reliably on pages that present their data in plain HTML tables.

3. Are there other methods to pull website data into Excel?

Yes, there are other methods available to pull website data into Excel. One popular method is to use web scraping tools or libraries like Python’s Beautiful Soup or R’s rvest. These tools allow you to programmatically extract data from websites and save it into a format that can be easily imported into Excel. Another method is to use API integration, if the website provides an API to retrieve data, which can be directly imported into Excel.

4. What can I do with the data once it’s in Excel?

Once you have pulled website data into Excel, there are plenty of possibilities. You can analyze the data, create charts and graphs, perform calculations, apply formulas, and generate reports. Excel provides a wide range of features and functions to help you manipulate and visualize the data to gain insights and make informed decisions.

5. How often can I update the data in Excel?

The frequency at which you can update the data in Excel depends on how the data source is updated. If the website you pulled the data from updates regularly, you can set up a refresh schedule in Excel to automatically update the data at specific intervals. However, if the data source is not updated frequently or requires manual action to update, you will need to manually refresh the data in Excel to ensure you have the most up-to-date information.